Decoding MCP: A Comparison of Model Context Protocol and REST API


The AI Isolation Problem: Why MCP Was Born

Picture a brilliant consultant locked in a windowless room. No internet, no documents, no tools—just raw intelligence. This was the reality of AI systems before MCP. Despite their astonishing capabilities, large language models (LLMs) remained trapped in silos, disconnected from the databases, APIs, and tools that could make them truly useful. Every new integration—whether fetching live data from PostgreSQL or automating Blender 3D modeling—required custom code, special prompting, and fragile plumbing. Developers faced an N×M integration nightmare: N AI models needing bespoke connections to M data sources.

Enter Model Context Protocol (MCP), Anthropic’s 2024 open-source breakthrough. Like USB-C for AI, MCP provides a standardized “port” for models to plug into any data source or tool. By early 2025, industry leaders like Block, Replit, and Microsoft Copilot adopted it, while 1,000+ community-built connectors flooded marketplaces like mcp.so. But why this seismic shift?

MCP Decoded: The “Universal Remote” for AI

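MCP isn’t just another API: it’s a protocol for real-time, bidirectional dialogue between AI applications and tools. Traditional integrations force developers to hardwire each connection. MCP flips this dynamic by standardizing a small set of building blocks that any compliant client and server can exchange: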

Resources: Data streams (e.g., “file://sales.csv”)

Tools: Actions with side effects (e.g., “run SQL query”)

Prompts: Reusable workflows (e.g., “generate monthly report template”)

Sampling: Server-initiated requests asking the host’s model to generate a completion (e.g., “summarize this log excerpt”)
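As a rough illustration of how these building blocks show up on the wire, the sketch below maps each one to JSON-RPC 2.0 method names and wraps a call in a request envelope. The method names follow the published MCP specification as understood here and should be checked against the spec revision you target; `build_request` is a hypothetical helper, not part of any SDK.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Building block -> JSON-RPC method names (assumed from the MCP specification;
# verify against the revision you target).
PRIMITIVE_METHODS = {
    "resources": ["resources/list", "resources/read"],
    "tools": ["tools/list", "tools/call"],
    "prompts": ["prompts/list", "prompts/get"],
    "sampling": ["sampling/createMessage"],  # sent by the server to the client
}

_request_ids = itertools.count(1)


def build_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Wrap a method call in a JSON-RPC 2.0 request envelope (hypothetical helper)."""
    known = {m for methods in PRIMITIVE_METHODS.values() for m in methods}
    if method not in known:
        raise ValueError(f"unknown method: {method}")
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


if __name__ == "__main__":
    # Read the resource used as an example in the list above.
    print(json.dumps(build_request("resources/read", {"uri": "file://sales.csv"}), indent=2))
```

Because every building block travels in the same envelope, a client needs only one transport and one message parser regardless of which server it talks to.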

REST API vs. MCP: A Paradigm Clash

REST API: The “Designated Driver” Model

Architecture: Request-response over HTTP (GET/POST)

Strengths: Maturity (Swagger, Treblle), statelessness, predictable CRUD endpoints

Limitations:

Rigid 1:1 endpoint-tool pairing (need new code per integration)

No dynamic discovery—AI must “know” endpoints in advance

Scales inefficiently (entire system scales for single-component needs)

MCP: The “Autonomous Agent” Enabler

Architecture: Bidirectional JSON-RPC 2.0 messaging over STDIO or HTTP with Server-Sent Events (SSE)

Breakthroughs:

Dynamic Tool Discovery: Servers advertise capabilities; AI selects tools at runtime

Stateful Sessions: Maintains context across multi-step workflows (e.g., “Pull sales data → analyze → email report”)

Unified Language: Each tool carries a name, a natural-language description, and a typed input schema (e.g., “fetch_customer_data(region: str)”)
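To make dynamic discovery more concrete, here is a minimal sketch of what an advertised tool might look like and how a client could check arguments before calling it. The descriptor shape (name, description, `inputSchema` as JSON Schema) follows the MCP specification as understood here; the description text, `validate_arguments`, and `select_tool` are hypothetical, and the keyword match is only a naive stand-in for the model's own choice.

```python
from typing import Any, Dict, List, Optional

# A descriptor in the shape a server might return from a tools/list call.
FETCH_CUSTOMER_DATA = {
    "name": "fetch_customer_data",
    "description": "Return customer records for a sales region.",  # assumed wording
    "inputSchema": {
        "type": "object",
        "properties": {"region": {"type": "string"}},
        "required": ["region"],
    },
}

_JSON_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Return a list of problems; covers only a tiny subset of JSON Schema."""
    schema = tool["inputSchema"]
    problems = []
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            problems.append(f"unexpected argument: {name}")
        elif not isinstance(value, _JSON_TYPES[spec["type"]]):
            problems.append(f"{name} should be of type {spec['type']}")
    return problems


def select_tool(tools: List[Dict[str, Any]], keyword: str) -> Optional[Dict[str, Any]]:
    """Naive stand-in for the model's choice: first description mentioning the keyword."""
    for tool in tools:
        if keyword.lower() in tool["description"].lower():
            return tool
    return None


if __name__ == "__main__":
    tool = select_tool([FETCH_CUSTOMER_DATA], "customer")
    print(tool["name"], validate_arguments(tool, {"region": "EMEA"}))
```

The point of the sketch is that nothing about `fetch_customer_data` is compiled into the client: the name, description, and schema all arrive at runtime.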

Key Comparison

| Dimension | REST API | MCP |
|---|---|---|
| Integration Scope | Fixed endpoints | Dynamic discovery |
| Communication | Request-response | Real-time streaming |
| AI Autonomy | Low (predefined paths) | High (runtime decisions) |
| Tool Chaining | Manual orchestration | Automated workflows |

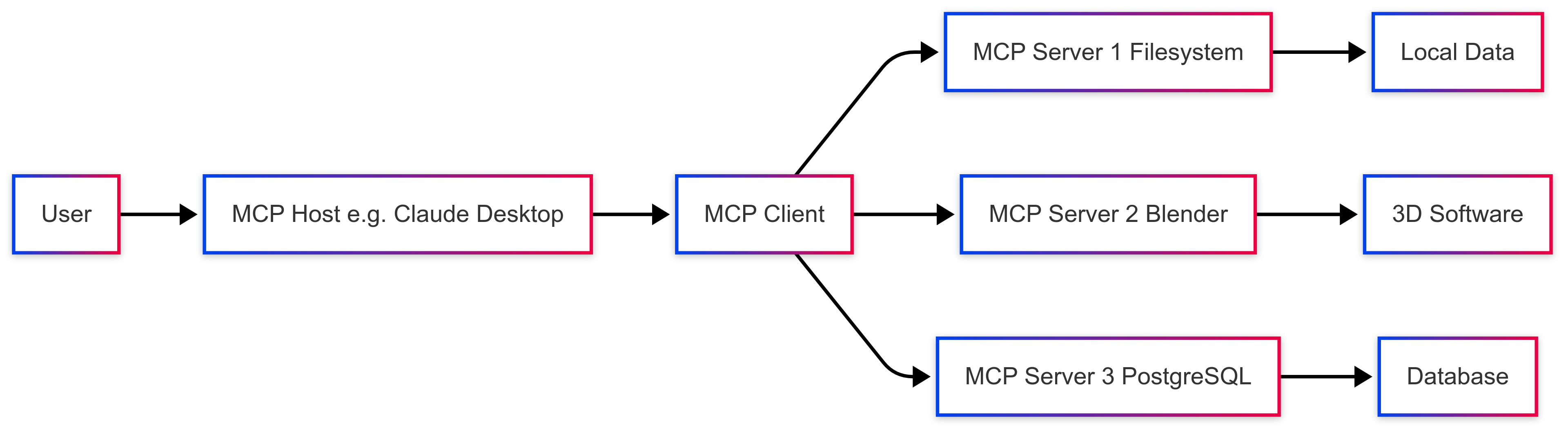

Anatomy of MCP: Hosts, Clients, and Servers

MCP’s power stems from its modular architecture:

MCP Host

- The user-facing AI application (e.g., Claude, Cursor IDE)

- Manages permissions, renders outputs, and orchestrates workflows

- Example: When you ask, “Summarize Q1 sales trends,” the host identifies needed tools

MCP Client

- Embedded within the host

- Maintains 1:1 connections to MCP servers

- Translates host commands into MCP protocol messages

MCP Server

- Lightweight adapters for tools/data (e.g., GitHub, PostgreSQL, local files)

- Exposes capabilities via:

  - Resources: Data endpoints (e.g., file://reports/)

  - Tools: Executable actions (e.g., run_query(query: str))

  - Prompts: Pre-built templates (e.g., "/prompts/sales_analysis")
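The server role can be sketched as a small dispatcher that answers JSON-RPC requests for each capability type. This is an in-memory illustration under stated assumptions, not the official SDK: `SketchServer`, the resource URI `file://reports/q1.txt`, and the stored strings are all hypothetical, while the method names and result shapes follow the MCP specification as understood here.

```python
from typing import Any, Callable, Dict


class SketchServer:
    """In-memory stand-in for an MCP server (not the official SDK)."""

    def __init__(self) -> None:
        # Hypothetical contents, for illustration only.
        self.resources = {"file://reports/q1.txt": "Q1 revenue summary"}
        self.tools: Dict[str, Callable[..., str]] = {"run_query": self._run_query}
        self.prompts = {"sales_analysis": "Analyze sales for {period}."}

    def _run_query(self, query: str) -> str:
        # Placeholder: a real server would execute the query against a database.
        return f"(pretend result of: {query})"

    def _error(self, request: Dict[str, Any], code: int, message: str) -> Dict[str, Any]:
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": code, "message": message}}

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Dispatch one JSON-RPC request to the matching capability."""
        method, params = request["method"], request.get("params", {})
        try:
            if method == "resources/read":
                text = self.resources[params["uri"]]
                result = {"contents": [{"uri": params["uri"], "text": text}]}
            elif method == "tools/call":
                text = self.tools[params["name"]](**params.get("arguments", {}))
                result = {"content": [{"type": "text", "text": text}]}
            elif method == "prompts/get":
                text = self.prompts[params["name"]].format(**params.get("arguments", {}))
                result = {"messages": [{"role": "user",
                                        "content": {"type": "text", "text": text}}]}
            else:
                return self._error(request, -32601, "Method not found")
        except (KeyError, TypeError) as exc:
            return self._error(request, -32602, f"Invalid params: {exc}")
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


if __name__ == "__main__":
    server = SketchServer()
    print(server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                         "params": {"name": "run_query",
                                    "arguments": {"query": "SELECT 1"}}}))
```

The error codes -32601 and -32602 are the standard JSON-RPC 2.0 codes for an unknown method and invalid parameters.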

Real-World Flow

- User asks Claude: “Email Lena last quarter’s marketing spend.”

- Claude (Host) → MCP Client: “Find tools for ‘email’ and ‘spend data’.”

- Client discovers: Gmail Server (Tool: send_email()) + PostgreSQL Server (Resource: marketing_budget)

- Claude generates plan: get_data() → summarize() → send_email()

- Client executes chain via servers
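The flow above can be sketched end to end with in-memory stand-ins. Everything here is hypothetical: `FakeServer`, `Client`, `Host`, and the placeholder data are invented for illustration, `summarize` stands in for the model's own reasoning step, and `get_data` is modeled as a tool for simplicity even though the flow describes the budget data as a resource.

```python
from typing import Callable, Dict, List, Tuple


class FakeServer:
    """Hypothetical in-memory server that advertises named tools."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]) -> None:
        self.name = name
        self._tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, tool: str, **arguments: str) -> str:
        return self._tools[tool](**arguments)


class Client:
    """One client per server, mirroring the 1:1 connection rule."""

    def __init__(self, server: FakeServer) -> None:
        self.server = server

    def list_tools(self) -> List[str]:
        return self.server.list_tools()

    def call_tool(self, tool: str, **arguments: str) -> str:
        return self.server.call_tool(tool, **arguments)


class Host:
    def __init__(self, servers: List[FakeServer]) -> None:
        self.clients = [Client(server) for server in servers]

    def discover(self) -> Dict[str, Client]:
        """Map each advertised tool name to the client that can reach it."""
        return {tool: client for client in self.clients for tool in client.list_tools()}

    def summarize(self, text: str) -> str:
        # Stand-in for the model's own summarization step.
        return f"Summary: {text}"

    def email_report(self, recipient: str) -> str:
        """Run the plan get_data() -> summarize() -> send_email()."""
        catalog = self.discover()
        data = catalog["get_data"].call_tool("get_data")
        summary = self.summarize(data)
        return catalog["send_email"].call_tool("send_email", to=recipient, body=summary)


def make_postgres_server() -> FakeServer:
    # Placeholder data; a real server would query the marketing_budget table.
    return FakeServer("postgres", {"get_data": lambda: "placeholder rows from marketing_budget"})


def make_gmail_server(outbox: List[Tuple[str, str]]) -> FakeServer:
    def send_email(to: str, body: str) -> str:
        outbox.append((to, body))  # record the message instead of sending it
        return "sent"
    return FakeServer("gmail", {"send_email": send_email})


if __name__ == "__main__":
    outbox: List[Tuple[str, str]] = []
    host = Host([make_postgres_server(), make_gmail_server(outbox)])
    print(host.email_report("Lena"), outbox)
```

Note that the host never hardcodes which server owns which tool; it rebuilds the catalog from whatever the connected servers advertise.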

Why MCP Changes Everything: Use Cases Unleashed

MCP transforms AI from a conversational novelty into an action-oriented collaborator:

🛠️ Developer Superpowers

- Codebase Exploration: Navigate repositories via GitHub MCP server

- Automated PR Reviews: Analyze diffs, suggest fixes

- Live Debugging: Run Python scripts via Docker MCP server while chatting

📊 Data Science Revolution

- Dynamic Analysis: Query Snowflake via SQL MCP server, visualize with Vega-Lite

- Report Generation: Chain prompts ("/prompts/quarterly_report") + data tools

🤖 Autonomous Agents

- 3D Design: “Render a vase with marble texture in Blender” → MCP executes Python commands

- Supply Chain Optimization: Monitor SAP inventory + adjust orders

Case Study: Block (Square) uses MCP to connect internal finance tools. Agents now pull real-time metrics, generate audits, and flag anomalies—cutting report time by 70%.

The Road Ahead: MCP as the Nervous System of AI

MCP isn’t just a protocol—it’s the foundation for ambient computing. As servers proliferate (from Apple Notes to Kubernetes), AI will seamlessly blend into our tools. Early challenges remain: security for enterprise data, protocol evolution, and tool discovery UX. Yet the ecosystem is exploding: Cloudflare now hosts MCP servers, LangChain integrates them as tools, and marketplaces like mcpmarket.com offer plug-and-play connectors.

Closing Insight: MCP addresses the “last-mile problem” for AI agents. Just as HTTP enabled the web, MCP could underpin an agentic internet in which AI doesn’t just answer questions but accomplishes goals. For developers, this means replacing one-off custom integrations with a universal connector. The era of isolated AI is ending.

“MCP isn’t making AI smarter—it’s making it finally useful.”

— Nir Diamant