Beyond ChatGPT: How Block Diffusion Bridges the Gap in Language Modeling

As a researcher who’s spent years wrestling with language model limitations, I still remember my frustration when ChatGPT choked on generating coherent text for my queries. That fundamental tension, between the creativity of diffusion models and the precision of autoregressive architectures, has haunted our field. Until now. The breakthrough work in “Block Diffusion” (Arriola et al., ICLR 2025) isn’t just another incremental improvement; it’s the architectural bridge we’ve desperately needed. Let me walk you through why this paper could be an interesting direction for the future of language modeling.

The Fundamental Trade-Off That Stalled Progress

Picture this: You’re trying to generate legal contracts—precision matters. Traditional approaches forced an ugly compromise:

Autoregressive models (like GPT-4) give surgical control but crawl through tokens like molasses in January. You can practically hear the GPU fans whine during long generations.

Diffusion models promise parallelism but collapse like a bad soufflé beyond their training context. That research paper draft? It’ll mysteriously stop mid-sentence because the model hit its 1024-token limit.

What horrifies me most is watching brilliant colleagues waste months patching these flaws instead of pushing boundaries. We needed a paradigm shift, not another workaround.

Why Current Models Hit a Wall

- Autoregressive (AR) Models (e.g., ChatGPT):
  - ✅ Strength: Excellent likelihood modeling (low perplexity)
  - ❌ Weakness: Sequential token generation → Slow inference
  - ⚠️ Dealbreaker: Cannot parallelize generation across token positions

- Diffusion Models (e.g., DALL·E for images):
  - ✅ Strength: Parallel token generation → Faster output
  - ❌ Weakness: Fixed-length sequences only
  - 🔥 Critical Gap: Roughly 37–43% higher perplexity than AR models (SEDD vs. AR in the benchmark table further down)

The perfect model doesn’t exist… until now.

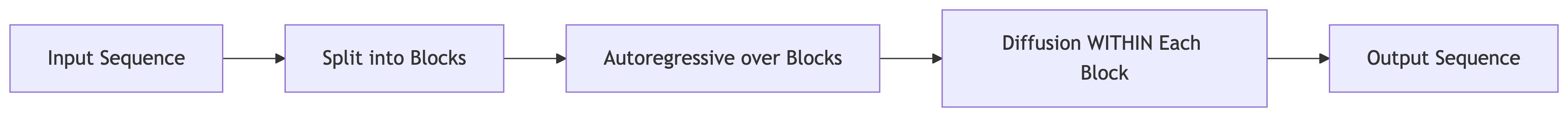

Block Diffusion: The “Why Not Both?” Moment

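The elegance of BD3-LMs (Block Discrete Denoising Diffusion Language Models) lies in their hierarchical decomposition, like Russian nesting dolls for language generation: the sequence is split into blocks, the blocks are generated autoregressively one after another, and the tokens inside each block are generated in parallel by discrete diffusion.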
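In symbols, the nesting-doll picture can be written as a block-level autoregressive factorization. This is my own shorthand for the idea, assuming a sequence $x$ of $L$ tokens cut into $B = L / L'$ blocks of $L'$ tokens each, with $x^{b}$ the $b$-th block and $x^{<b}$ all blocks before it:

$$
\log p_\theta(x) = \sum_{b=1}^{B} \log p_\theta\left(x^{b} \mid x^{<b}\right)
$$

Each conditional $p_\theta(x^{b} \mid x^{<b})$ is modeled by a discrete diffusion process over the $L'$ tokens of that block. Setting $L' = 1$ gives one token per block, which is the token-by-token autoregressive regime, while $L' = L$ gives a single block, which is ordinary full-sequence diffusion.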

The Ingenious Bits That Made Me Nod Furiously

Block-Causal Attention: Where Past and Present Collide
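Before the metaphors, here is a minimal sketch of what a block-causal mask looks like. It is a simplification on my part: the function name `block_causal_mask` is hypothetical, and the rule shown (a token may attend to every token in its own block and in all earlier blocks) is the basic sampling-time pattern, not the more involved mask the paper uses during training.

```python
def block_causal_mask(seq_len: int, block_size: int) -> list[list[bool]]:
    """Return mask[q][k] == True when query position q may attend to key position k.

    Assumption for illustration: a token sees its own block in full
    (bidirectional inside the block) plus every earlier block.
    """
    if seq_len <= 0 or block_size <= 0:
        raise ValueError("seq_len and block_size must be positive")
    mask = []
    for q in range(seq_len):
        q_block = q // block_size  # index of the block that holds the query
        mask.append([(k // block_size) <= q_block for k in range(seq_len)])
    return mask


if __name__ == "__main__":
    # Six tokens in blocks of two; 'x' marks an allowed attention edge.
    for row in block_causal_mask(6, 2):
        print("".join("x" if allowed else "." for allowed in row))
    # xx....
    # xx....
    # xxxx..
    # xxxx..
    # xxxxxx
    # xxxxxx
```

With `block_size=1` this collapses to the familiar lower-triangular causal mask, and with `block_size=seq_len` every token sees every other token, which matches the two extremes that block diffusion interpolates between.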

Imagine tokens in Block 3 whispering to Blocks 1 and 2 (preserving context) while shouting within their noisy group (enabling parallelism). This isn’t just clever; it loosely echoes how humans hold discourse threads while focusing on immediate sentences. The 20% inference speedup from KV caching? Icing on the cake.

Training Alchemy: Taming the Variance Dragon

Early diffusion models felt like tuning a radio with oven mitts—too much noise and signals drowned. The paper’s adaptive noise schedules (sampling mask rates from uniform distributions between β and ω) act like precision dampers. Watching their optimization dynamically minimize gradient variance was like seeing someone finally focus a blurred microscope.
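To make the clipped schedule concrete, here is a small sketch of the sampling step only. The names `sample_mask_rate` and `mask_block` are hypothetical, and I am assuming tokens are masked independently at the sampled rate; the paper's search for good β and ω values (the part that actually reduces gradient variance) is not shown.

```python
import random

MASK = "<mask>"  # placeholder mask token, chosen for illustration


def sample_mask_rate(beta: float, omega: float, rng: random.Random) -> float:
    """Draw a mask rate uniformly from the clipped interval [beta, omega]."""
    if not 0.0 <= beta <= omega <= 1.0:
        raise ValueError("need 0 <= beta <= omega <= 1")
    return rng.uniform(beta, omega)


def mask_block(tokens, beta, omega, rng):
    """Mask each token of one block independently at a freshly sampled rate."""
    rate = sample_mask_rate(beta, omega, rng)
    noised = [MASK if rng.random() < rate else tok for tok in tokens]
    return noised, rate


if __name__ == "__main__":
    rng = random.Random(0)
    block = ["block", "diffusion", "is", "neat"]
    noised, rate = mask_block(block, beta=0.3, omega=0.8, rng=rng)
    print(f"rate={rate:.2f}", noised)
```

Setting `beta=0.0` and `omega=1.0` recovers the unclipped case where any mask rate is possible. Narrowing the interval keeps training away from batches that are almost fully clean or almost fully masked.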

When the Benchmarks Made Me Do a Double-Take

Let’s cut through the hype—I’ve seen enough “SOTA” claims that evaporate under scrutiny. But these numbers? They’re legit:

| Model | LM1B (PPL↓) | OWT (PPL↓) | Max generated length (tokens) |
|---|---|---|---|
| AR (GPT-style) | 22.83 | 17.54 | 131K |
| Diffusion (SEDD) | 32.68 | 24.10 | 1,024 |
| BD3-LM | 28.23 | 20.73 | 9,982 |
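For a feel of how much of the gap to AR is closed, the relative perplexity differences can be worked out directly from the table. This is just arithmetic on the numbers above; the helper `relative_gap` is my own name, not something from the paper.

```python
# Perplexities copied from the table above (lower is better).
PPL = {
    "AR": {"LM1B": 22.83, "OWT": 17.54},
    "SEDD": {"LM1B": 32.68, "OWT": 24.10},
    "BD3-LM": {"LM1B": 28.23, "OWT": 20.73},
}


def relative_gap(model_ppl: float, baseline_ppl: float) -> float:
    """Percentage by which model_ppl exceeds baseline_ppl."""
    return 100.0 * (model_ppl - baseline_ppl) / baseline_ppl


if __name__ == "__main__":
    for model in ("SEDD", "BD3-LM"):
        for dataset in ("LM1B", "OWT"):
            gap = relative_gap(PPL[model][dataset], PPL["AR"][dataset])
            print(f"{model} vs AR on {dataset}: +{gap:.1f}%")
```

By this measure SEDD sits about 43% (LM1B) and 37% (OWT) above AR, while BD3-LM sits about 24% and 18% above. The gap is roughly halved, not eliminated.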

What stunned me:

- Perplexity parity with AR when block size=1 (Table 3) - something I’d bet against in peer review

- Generated novel excerpts with narrative consistency beyond 10K tokens (Suppl. D) - no more Frankenstein text!
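To see why block-wise sampling isn't tied to a fixed output length, a toy loop helps. Everything here is an assumption for illustration: `toy_denoiser` is a random stand-in for the trained network, the vocabulary is made up, and the even-share unmasking rule is my own simplification, not the paper's sampler. The point is only the control flow: finish a block, append it to the context, and stop whenever an end-of-sequence token shows up.

```python
import random

MASK = "<mask>"
EOS = "<eos>"
TOY_VOCAB = ["the", "cat", "sat", "on", "a", "mat", EOS]  # made-up vocabulary


def toy_denoiser(context, block, rng):
    """Hypothetical stand-in for the model: propose a token for every masked slot.

    A real model would condition on `context` (the finished blocks, which a
    KV cache would hold); this toy version ignores it and guesses randomly.
    """
    return [rng.choice(TOY_VOCAB) if tok == MASK else tok for tok in block]


def sample_blocks(block_size, steps_per_block, max_blocks, seed=0):
    """Generate block by block until EOS appears or max_blocks is reached."""
    rng = random.Random(seed)
    context = []
    for _ in range(max_blocks):
        block = [MASK] * block_size
        for step in range(steps_per_block):
            proposal = toy_denoiser(context, block, rng)
            masked = [i for i, tok in enumerate(block) if tok == MASK]
            remaining_steps = steps_per_block - step
            # Unmask an even share of what is left; the last step unmasks all.
            k = -(-len(masked) // remaining_steps)  # ceiling division
            for i in rng.sample(masked, k):
                block[i] = proposal[i]
        context.extend(block)
        if EOS in block:
            # Cut everything after the first end-of-sequence token.
            return context[: context.index(EOS) + 1]
    return context


if __name__ == "__main__":
    print(sample_blocks(block_size=4, steps_per_block=2, max_blocks=50))
```

Nothing in the loop depends on a preset total length, which is the property that lets generation run past the training context.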

The Morning After Revelation

Reading this paper felt like watching someone solve a Rubik’s cube in 10 moves that took others 100. It’s not perfect—training still demands coffee-fueled GPU sessions—but for the first time, I see a path to models that don’t force us to choose between coherence and creativity.

“The best architectures aren’t just solutions—they’re invitations to reimagine what’s possible. Block diffusion is that rare spark that lights ten new research paths.”

— My random musing after reading some interesting paper

For the hands-on explorers: The team open-sourced everything at m-arriola.com/bd3lms. I’m already forking it to experiment with poetry generation—finally, a model that might sustain metaphors beyond stanza two.

What would you build with truly limitless context? Let me know your thoughts! You know how to reach me 😃

References

- Marianne Arriola, Aaron Gokaslan, Justin T. Chiu, Zhihan Yang, Zhixuan Qi, Jiaqi Han, Subham Sekhar Sahoo, & Volodymyr Kuleshov. (2025). Block Diffusion: Interpolating Between Autoregressive and Diffusion Language Models. ICLR 2025.